Vibes. Experiences. Grandmothers.

In the last few essays, we explored attention mechanisms and supervised fine-tuning at scale, the first two steps toward getting a large language model to emulate a desired behavior, such as question answering, as ChatGPT does. We also explored how reinforcement learning lets an agent transcend human expertise and seek excellence by learning from interactions with its environment, through trial and error and the exploration of novel pathways. We then addressed the primary practical challenge facing reinforcement learning algorithms: designing rewards when they are scarce relative to the effort required. In this essay, we turn to the approach that modern dialogue agents use to address this challenge.

Consider Dave, an architect working on the development of a recommender system for online shopping. Dave’s team embarked on a noble mission to revolutionize the online shopping experience, envisioning a future where recommendations are tailored to individual preferences based on past transactions and browsing history. To achieve incremental progress, they set a clear objective: a 50% increase in the checkout rate within a quarter.

The team set about constructing intricate machine learning systems, meticulously analyzing users’ behavioral data to maximize the likelihood of customers adding items to their cart and swiftly proceeding to checkout. They achieved remarkable success, surpassing their quarterly targets.

However, one fine day, a curious analyst surveyed a thousand engaged users, asking them, “What does it feel like to shop with our app?” To the team’s surprise, every respondent lamented that the overall shopping experience felt intrusive and cumbersome. What had happened? Hadn’t they generated millions in revenue from these very users that quarter? A painful moment of introspection revealed the forgotten guiding principle: maximizing purely objective goals, such as the number of items in users’ carts, had inadvertently eroded the subjective quality of the shopping experience!

In defense of Dave and his team, shopping transaction data simply does not capture subjective qualities like satisfaction, vibes, or ambiance. How, then, does one quantify and model something as subjective as the “overall shopping experience”?

The conventional approach is to ask users directly, through movie ratings, user experience research, surveys, and satisfaction questionnaires, or to rely on objective proxies for subjective experience, such as subscription rates. However, collecting experiential, subjective labels is costly and time-consuming. Moreover, with a diverse user base, collecting sufficient data becomes impractical.

Enter Reinforcement Learning from Human Feedback (RLHF), a technique that bridges the gap between sparse rewards and subjectivity. First, a fine-tuned Large Language Model (LLM)-based dialogue agent is given a task, for instance: “Compose a letter for my friend, wishing them a great first week at work, without resorting to clichés.” The model generates multiple versions of the letter, and human raters rank them from best to worst. This process is repeated across a large number of prompts to train a “reward model”, which takes a piece of text as input and assigns it a numerical score indicating its overall quality. Finally, this reward model is used to train a reinforcement learning agent (as described in an earlier essay), which seeks excellence through millions of simulated one-on-one dialogue episodes, guided by a reward model that, in our example, rewards only high-quality letters to friends.
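To make the reward-model step a little more concrete, here is a minimal sketch in Python. It assumes each letter has already been reduced to a small, hypothetical feature vector, and the names `ranking_to_pairs`, `train_reward_model`, and `reward` are illustrative only. The sketch expands each best-to-worst ranking into (preferred, rejected) pairs and fits a linear scorer with a pairwise logistic (Bradley-Terry style) loss, one common choice for reward models and the form described in the InstructGPT paper. A real system would replace the linear scorer with a fine-tuned LLM that outputs a single number, and the final reinforcement learning step (PPO, in InstructGPT's case) is not shown.

```python
import itertools
import math
import random


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def ranking_to_pairs(ranked):
    """Expand one rater's best-to-worst ranking into (preferred, rejected) pairs."""
    return list(itertools.combinations(ranked, 2))


def reward(w, x):
    """Hypothetical linear reward model: a scalar score w . x
    (a stand-in for an LLM with a scalar score head)."""
    return sum(wi * xi for wi, xi in zip(w, x))


def train_reward_model(rankings, dim, lr=0.1, epochs=200, seed=0):
    """Fit w by SGD on the pairwise loss -log sigmoid(r(preferred) - r(rejected))."""
    rng = random.Random(seed)
    w = [0.0] * dim
    pairs = [pair for ranked in rankings for pair in ranking_to_pairs(ranked)]
    for _ in range(epochs):
        rng.shuffle(pairs)
        for better, worse in pairs:
            margin = reward(w, better) - reward(w, worse)
            # The loss gradient w.r.t. the margin is -(1 - sigmoid(margin)), so a
            # descent step moves w toward (better - worse), more strongly when the
            # model currently gets the pair wrong (small or negative margin).
            step = lr * (1.0 - sigmoid(margin))
            for i in range(dim):
                w[i] += step * (better[i] - worse[i])
    return w


# Hypothetical features per letter: [originality, warmth, cliche_density].
letter_a = [0.9, 0.8, 0.0]  # ranked best by the rater
letter_b = [0.5, 0.6, 0.3]
letter_c = [0.1, 0.4, 0.9]  # ranked worst (full of cliches)

w = train_reward_model([[letter_a, letter_b, letter_c]], dim=3)
for name, x in [("a", letter_a), ("b", letter_b), ("c", letter_c)]:
    print(name, round(reward(w, x), 3))
```

Note that the raters never assign absolute scores: the loss depends only on differences between scores, so the model learns its quality scale purely from comparisons. The learned scores reproduce the rater's ordering, and an RL agent can then be optimized to produce text that this scorer rates highly.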

In summary, this is how ChatGPT and other dialogue agents are trained. They progress from capturing linguistic correlations to emulating human experts and, ultimately, to capturing the essence of high-quality writing.

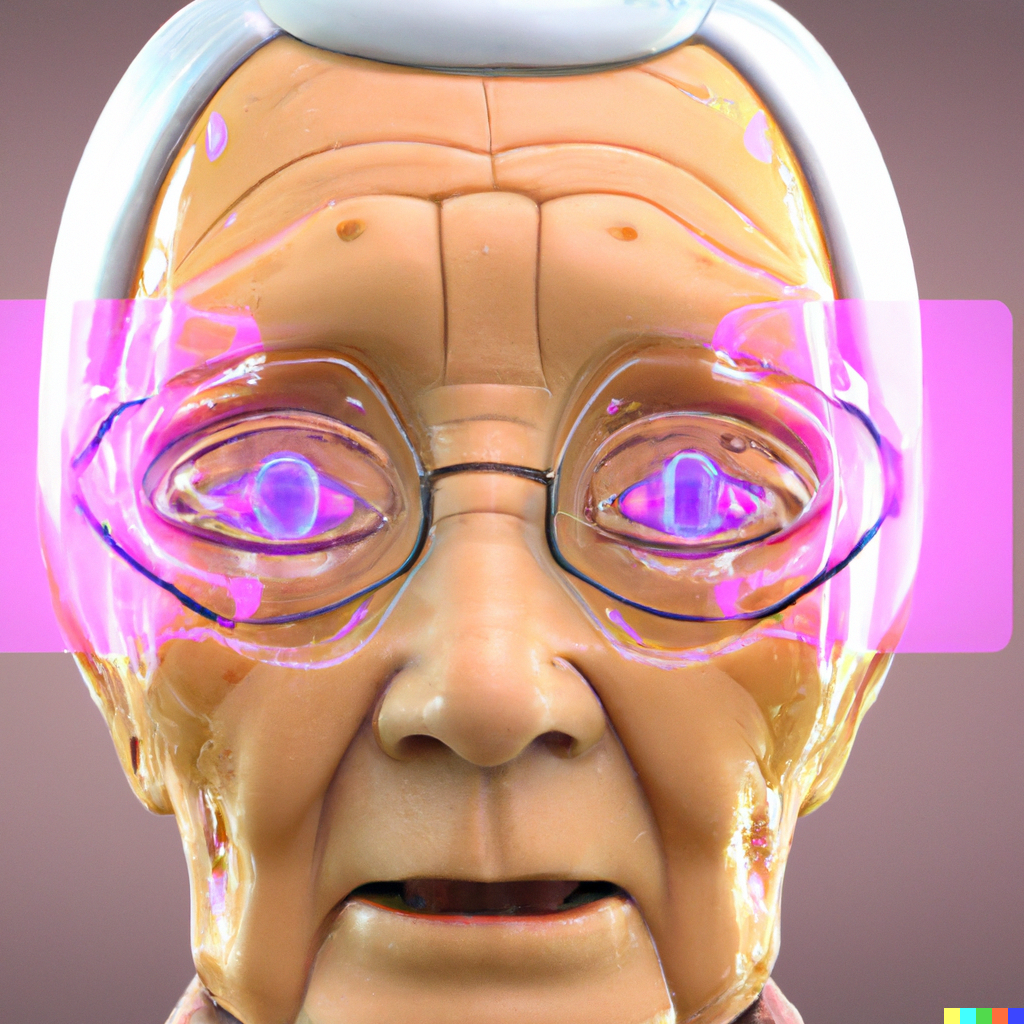

As a final note on subjectivity, a running joke in the AI research community is the pursuit of the “grandmother neuron”, the neuron possessing all-encompassing wisdom, the limit of all subjectivity. Jokes aside, I hope you have had the privilege of interacting with a wise grandparent: one of those special individuals who dispense pearls of wisdom without elaborate reasoning. Questioning their wisdom often leads to dead ends, yet you marvel at the profound nuggets of insight they provide. In a sense, they are the “reward models” bestowed upon us by evolution, and we celebrate the enduring wisdom they offer. Long live the wise.

Post-Script:

Keeping my grandmother alive by quoting her on the internet. “If it truly mattered, you would have already thought of it. So, no regrets, trust yourself.”